
You are free to share this article under the Attribution 4.0 International license.

New research digs into how much alcohol hummingbirds consume.

Your backyard hummingbird feeder filled with sugar water is a natural experiment in fermentation—yeast settle in and turn some of the sugar into alcohol.

The same is true of nectar-filled flowers, which are an ideal gathering place for yeast—a type of fungus—and for bacteria that metabolize sugar and produce ethanol.

To biologist Robert Dudley, this raises a host of questions. How much alcohol do hummingbirds consume in their daily quest for sustenance? Are they attracted to alcohol or repelled by it? Since alcohol is a natural byproduct of the sugary fruit and floral nectar that plants produce, is ethanol an inevitable part of the diet of hummingbirds and many other animals?

“Hummingbirds are eating 80% of their body mass a day in nectar,” says Dudley, professor of integrative biology at University of California, Berkeley. “Most of it is water and the remainder sugar. But even if there are very low concentrations of ethanol, that volumetric consumption would yield a high dosage of ethanol, if it were out there. Maybe, with feeders, we’re not only [feeding] hummingbirds, we’re providing a seat at the bar every time they come in.”

During the worst of the COVID-19 pandemic, these questions became difficult to test in the wilds of Central America, or in Africa, home to nectar-feeding sunbirds. So Dudley tasked several undergraduate students with experimenting on the hummers visiting the feeder outside his office window, to find out whether alcohol in sugar water was a turn-off or a turn-on. All three test subjects were male Anna's hummingbirds (Calypte anna), year-round residents of the Bay Area.

The results of that study, which appears in the journal Royal Society Open Science, demonstrate that hummingbirds happily sip from sugar water with up to 1% alcohol by volume, finding it just as attractive as plain sugar water.

They appear to be only moderate tipplers, however, because they sip only half as much as normal when the sugar water contains 2% alcohol.

“They’re consuming the same total amount of ethanol, they’re just reducing the volume of the ingested 2% solution. So that was really interesting,” Dudley says. “That was a kind of a threshold effect and suggested to us that whatever’s out there in the real world, it’s probably not exceeding 1.5%.”
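Dudley's "same total amount" point follows from simple arithmetic. Let V stand for the volume a bird drinks of plain or 1% sugar water (the birds treated the two as equally attractive); V is a symbol introduced here for illustration, not a value reported by the study. Halving intake of the 2% solution then leaves the ethanol dose E unchanged:

$$
E_{1\%} = 0.01\,V, \qquad E_{2\%} = 0.02 \times \frac{V}{2} = 0.01\,V = E_{1\%}
$$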

When he and his colleagues tested the alcohol level in sugar water that had sat in the feeder for two weeks, they found a much lower concentration: about 0.05% by volume.

“Now, 0.05% just doesn’t sound like much, and it’s not. But again, if you’re eating 80% of your body weight a day, at 0.05% ethanol you’re getting a substantial load of ethanol relative to your body mass,” he says. “So it’s all consistent with the idea that there’s a natural, chronic exposure to physiologically significant levels of ethanol derived from this nutritional source.”

“They burn the alcohol and metabolize it so quickly. Likewise with the sugars. So they’re probably not seeing any real effect. They’re not getting drunk,” he adds.
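The core of Dudley's argument is that a tiny concentration multiplied by a very large daily intake still adds up to a real dose. The minimal Python sketch below works through that arithmetic using the article's two figures: 80% of body mass eaten per day and 0.05% alcohol by volume. The nectar density (taken as 1.0 g/mL) and ethanol density (0.789 g/mL) are assumptions, not figures from the study. The function name `daily_ethanol_g_per_kg` is hypothetical.

```python
# Back-of-the-envelope estimate of daily ethanol intake for a nectar feeder.
# Assumptions (not from the study): nectar density of about 1.0 g/mL and
# ethanol density of 0.789 g/mL at room temperature.

ETHANOL_DENSITY_G_PER_ML = 0.789


def daily_ethanol_g_per_kg(
    intake_fraction: float = 0.80,          # nectar eaten per day, as a fraction of body mass
    ethanol_abv: float = 0.0005,            # 0.05% alcohol by volume, as measured in the feeder
    nectar_density_g_per_ml: float = 1.0,   # hypothetical; real sugar water is a bit denser
) -> float:
    """Return grams of ethanol ingested per kilogram of body mass per day."""
    nectar_g = intake_fraction * 1000.0              # grams of nectar per kg of bird
    nectar_ml = nectar_g / nectar_density_g_per_ml   # convert nectar mass to volume
    ethanol_ml = nectar_ml * ethanol_abv             # ABV is a volume fraction
    return ethanol_ml * ETHANOL_DENSITY_G_PER_ML     # convert ethanol volume to mass


if __name__ == "__main__":
    dose = daily_ethanol_g_per_kg()
    print(f"{dose:.2f} g ethanol per kg body mass per day")
```

With the default values the estimate comes to roughly 0.32 g of ethanol per kilogram of body mass per day. Scaled naively to a 70 kg person, that would be about 22 g, or roughly one and a half US standard drinks of 14 g each. The comparison ignores how much faster hummingbirds metabolize alcohol. Because real sugar water is slightly denser than water, passing a larger `nectar_density_g_per_ml` lowers the estimate a little.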

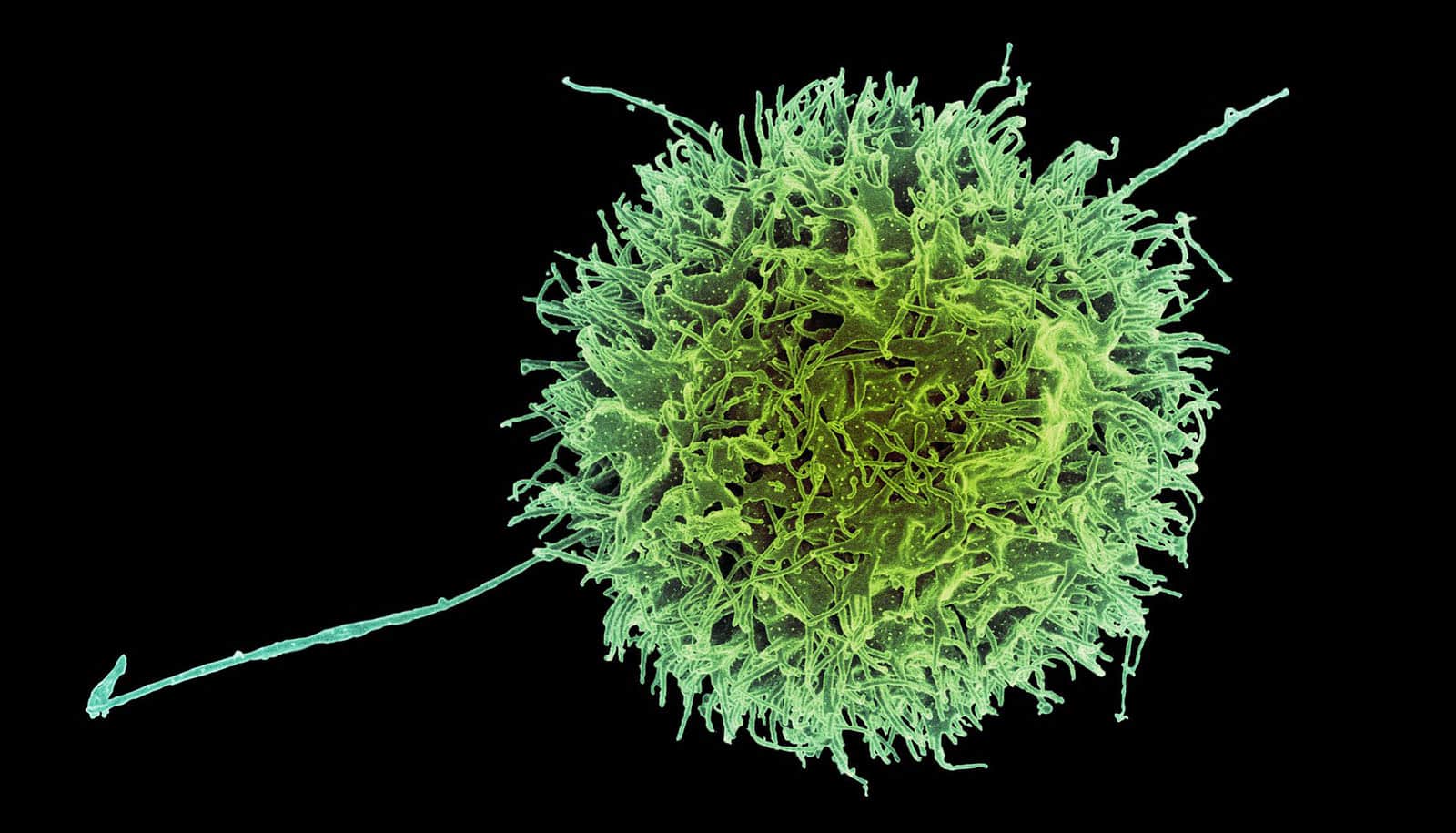

The research is part of a long-term project by Dudley and his colleagues—herpetologist Jim McGuire and bird expert Rauri Bowie, both professors of integrative biology and curators at UC Berkeley’s Museum of Vertebrate Zoology. They seek to understand the role that alcohol plays in animal diets, particularly in the tropics, where fruits and sugary nectar easily ferment, and alcohol cannot help but be consumed by fruit-eating or nectar-sipping animals.

“Does alcohol have any behavioral effect? Does it stimulate feeding at low levels? Does it motivate more frequent attendance of a flower if they get not just sugar, but also ethanol? I don’t have the answers to these questions. But that’s experimentally tractable,” he says.

Part of this project, funded by the National Science Foundation, involves testing the alcohol content of fruits in Africa and of nectar in flowers in the UC Botanical Garden. No one has yet systematically studied the alcohol content of fruits and nectars, or alcohol consumption by nectar-sipping birds, insects, or mammals, or by fruit-eating animals, including primates.

But several isolated studies are suggestive. A 2008 study found that the nectar in palm flowers consumed by pen-tailed tree shrews, which are small, ratlike animals in West Malaysia, had levels of alcohol as high as 3.8% by volume. Another study, published in 2015, found a relatively high alcohol concentration—up to 3.8%—in the nectar eaten by the slow loris, a type of primate, and that both slow lorises and aye-ayes, another primate, preferred nectar with higher alcohol content.

The new study shows that birds are also likely consuming alcohol produced by natural fermentation.

“This is the first demonstration of ethanol consumption by birds, quote, in the wild. I’ll use that phrase cautiously because it’s a lab experiment and feeder measurement,” Dudley says. “But the linkage with the natural flowers is obvious. This just demonstrates that nectar-feeding birds, not just nectar-feeding mammals, not just fruit-eating animals, are all potentially exposed to ethanol as a natural part of their diet.”

The next step, he says, is to measure how much ethanol is naturally found in flowers and determine how frequently birds consume it. He plans to extend his study to Old World sunbirds and to honeyeaters in Australia, both of which occupy the nectar-sipping niche that hummingbirds fill in the Americas.

Dudley has been obsessed with alcohol use and misuse for years, and in his book, The Drunken Monkey: Why We Drink and Abuse Alcohol (University of California Press, 2014), presented evidence that humans’ attraction to alcohol is an evolutionary adaptation to improve survival among primates. Only with the coming of industrial alcohol production has our attraction turned, in many cases, into alcohol abuse.

“Why do humans drink alcohol at all, as opposed to vinegar or any of the other 10 million organic compounds out there? And why do most humans actually metabolize it, burn it, and use it pretty effectively, often in conjunction with food, but then some humans also consume to excess?” he asks.

“I think, to get a better understanding of human attraction to alcohol, we really have to have better animal model systems, but also a realization that the natural availability of ethanol is actually substantial, not just for primates that are feeding on fruit and nectar, but also for a whole bunch of other birds and mammals and insects that are also feeding on flowers and fruits,” he says. “The comparative biology of ethanol consumption may yield insight into modern day patterns of consumption and abuse by humans.”

This work received support from the National Science Foundation and UC Berkeley’s Undergraduate Research Apprentice Program.

Source: UC Berkeley