City lizards share parallel genomic markers that set them apart from neighboring forest lizards, a study finds.

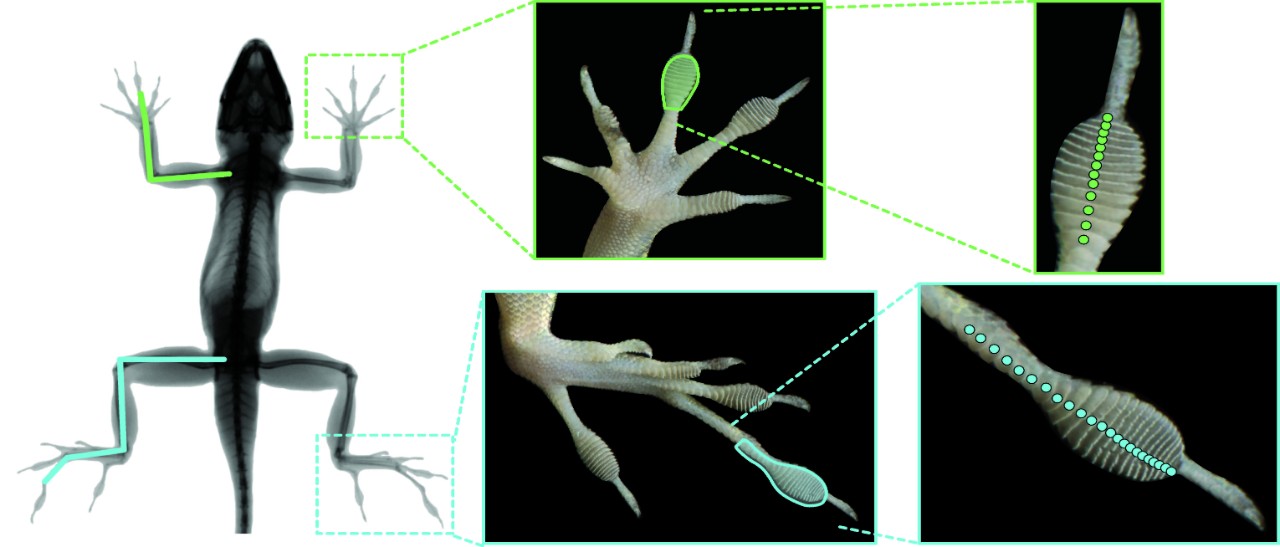

The genetic variations linked to urbanization underlie physical differences in the urban lizards, including longer limbs and larger toepads, that show how these lizards have evolved to adapt to city environments.

Urbanization has dramatically transformed landscapes around the world—changing how animals interact with nature, creating “heat islands” with higher temperatures, and hurting local biodiversity. Yet many organisms survive and even thrive in these urban environments, taking advantage of new types of habitat created by humans. Researchers studying evolutionary changes in urban species have found that some populations, for example, undergo metabolic changes from new diets or develop an increased tolerance of heat.

“Urbanization impacts roughly two-thirds of the Earth and is expected to continue to intensify, so it’s important to understand how organisms might be adapting to changing environments,” says Kristin Winchell, assistant professor of biology at New York University and the study’s first author.

“In many ways, cities provide us with natural laboratories for studying adaptive change, as we can compare urban populations with their non-urban counterparts to see how they respond to similar stressors and pressures over short periods of time.”

Anolis cristatellus lizards—a small-bodied species also known as the Puerto Rican crested anole—are common in both urban and forested areas of Puerto Rico. Prior studies by Winchell and her colleagues found that urban Anolis cristatellus have evolved certain traits to live in cities: they have larger toepads with more specialized scales that allow them to cling to smooth surfaces like walls and glass, and have longer limbs that help them sprint across open areas.

In the new study, the researchers looked at 96 Anolis cristatellus lizards from three regions of Puerto Rico—San Juan, Arecibo, and Mayagüez—comparing lizards living in urban centers with those living in forests surrounding each city. Their findings appear in PNAS.

The researchers first confirmed that the lizard populations in the three regions were genetically distinct from one another, so any similarities they found among urban lizards across the three cities could be attributed to urbanization rather than to close relatedness. They then measured the lizards' toepads and legs and found that urban lizards had significantly longer limbs and larger toepads with more specialized scales on their toes, supporting their earlier finding that these traits evolved to enable urban lizards to thrive in cities.

To understand the genetic basis of these trait differences, the researchers conducted several genomic analyses on exomic DNA, the regions of the genome that code for proteins. They identified a set of 33 genes found in three regions of the lizard genome that were repeatedly associated with urbanization across populations, including genes related to immune function and metabolism.

“While we need further analysis of these genes to really know what this finding means, we do have evidence that urban lizards get injured more and have more parasites, so changes to immune function and wound healing would make sense. Similarly, urban anoles eat human food, so it is possible that they could be experiencing changes to their metabolism,” says Winchell.

In an additional analysis, they found 93 genes in the urban lizards that are important for limb and skin development, offering a genomic explanation for their longer legs and larger toepads.

“The physical differences we see in the urban lizards appear to be mirrored at the genomic level,” says Winchell. “If urban populations are evolving with parallel physical and genomic changes, we may even be able to predict how populations will respond to urbanization just by looking at genetic markers.”

“Understanding how animals adapt to urban environments can help us focus our conservation efforts on the species that need it the most, and even build urban environments in ways that maintain all species,” adds Winchell.

Do the differences in urban lizards apply to people living in cities? Not necessarily, according to Winchell, as humans aren’t at the whim of predators the way lizards are. But humans are subject to some of the same urban factors, including pollution and higher temperatures, that seem to be contributing to adaptation in other species.

Additional study authors are from Princeton University; Washington University in St. Louis; the University of Massachusetts Boston and Universidad Católica de la Santísima Concepción in Chile; Virginia Commonwealth University; and Rutgers University-Camden. The research was funded in part by the National Science Foundation and by the University of Massachusetts Boston Bollinger Memorial Research Grant.

Source: NYU